Introduction

Part 3 of this PowerCLI tips series goes through advanced reporting using ESXCLI.

What’s ESXCLI?

I suggest reading the following:

- vSphere 5.0 esxcli by Duncan Epping

- What is esxcli by William Lam

- vSphere 5.0 Documentation

Why ESXCLI For Reporting?

PowerCLI itself already has most of the functions needed to generate a report. However, some ESXCLI commands can produce certain reports faster and more easily. The later sections discuss this with specific examples (scenarios).

This blog post discusses two examples:

- Storage

- Network & Firewall

Preparation

Before starting, note that one advantage of running ESXCLI through PowerCLI is that SSH does not need to be enabled on the ESXi servers. It does, however, require appropriate permissions, so you might as well run it with a Read-Only account and add permissions as required.

First, retrieve the ESXCLI object for a host and save it to a variable: $esxcli = Get-VMHost -Name "ESXi" | Get-EsxCli. Calling $esxcli then outputs the following:

PowerCLI C:\> $esxcli

===============================

EsxCli: esx01.test.com

Elements:

---------

device esxcli fcoe graphics hardware iscsi network sched software storage system vm vsan

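The output looks very similar to the namespaces you see when running esxcli in the ESXi Shell. The difference is that namespaces are accessed as properties joined by dots rather than separated by spaces. For example, where you would type esxcli storage in the shell, in PowerCLI you use $esxcli.storage.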

With the preparation work above, we are ready to go through some examples! 🙂

Storage

NOTE: For the report below, I am assuming the virtual disks from the storage array are mapped to all ESXi servers in the cluster (which is usual for most people, so that they benefit from HA/DRS).

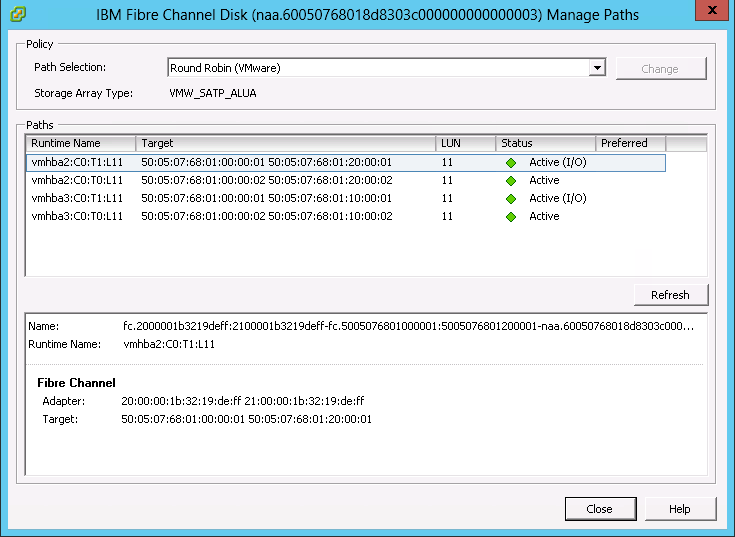

Looking at the screenshot above, the report should contain:

- Cluster

- Adapter, e.g. vmhba2 or vmhba3

- Device, i.e. UID

- TargetIdentifier, e.g. 50:05:07……

- RuntimeName, e.g. C0:T1:L11

- LUN, e.g. 11

- State, e.g. Active

Using ESXCLI, this can be achieved quite simply. Assuming you have already saved the ESXCLI object to the variable $esxcli, save the output of the following to variables:

- $esxcli.storage.core.path.list(): outputs the list of all paths to the storage devices attached to this ESXi server.

- $esxcli.storage.core.device.list(): outputs the list of all storage devices attached to this ESXi server.

Then filter the device list to keep only Fibre Channel devices. For each of them, select the required properties from the paths that belong to that device.

Combining the above:

$path_list = $esxcli.storage.core.path.list()

$device_list = $esxcli.storage.core.device.list()

$cluster = Get-Cluster -VMHost (Get-VMHost -Name $esxcli.system.hostname.get().FullyQualifiedDomainName)

$device_list | where {$_.DisplayName -match "Fibre Channel"} | ForEach-Object { $device = $_.Device; $path_list | where {$_.Device -eq $device} | select @{N="Cluster";E={$cluster.Name}}, Adapter, Device, TargetIdentifier, RuntimeName, LUN, State }

Example Output:

Cluster : Development

Adapter : vmhba3

Device : naa.60050768018d8303c000000000000003

TargetIdentifier : fc.5005076801000002:5005076801100002

RuntimeName : vmhba3:C0:T0:L11

LUN : 11

State : active

Cluster : Development

Adapter : vmhba3

Device : naa.60050768018d8303c000000000000003

TargetIdentifier : fc.5005076801000001:5005076801100001

RuntimeName : vmhba3:C0:T1:L11

LUN : 11

State : active

Cluster : Development

Adapter : vmhba2

Device : naa.60050768018d8303c000000000000003

TargetIdentifier : fc.5005076801000002:5005076801200002

RuntimeName : vmhba2:C0:T0:L11

LUN : 11

State : active

Cluster : Development

Adapter : vmhba2

Device : naa.60050768018d8303c000000000000003

TargetIdentifier : fc.5005076801000001:5005076801200001

RuntimeName : vmhba2:C0:T1:L11

LUN : 11

State : active

Quite easy, isn’t it?
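The nested filter above scans the whole path list once for every Fibre Channel device. Below is a sketch of an alternative, not the approach used above. It collects the Fibre Channel device names into a hashtable first, so the path list is walked only once. The sketch runs against hypothetical mock objects that stand in for the ESXCLI output, so it can be tried without a vCenter connection. Property names follow the example output; the local USB device, its values and $clusterName are made up.

```powershell
# Hypothetical mock data standing in for $esxcli.storage.core.device.list()
# and $esxcli.storage.core.path.list(); property names follow the example output above.
$device_list = @(
    [pscustomobject]@{ Device = 'naa.60050768018d8303c000000000000003'; DisplayName = 'IBM Fibre Channel Disk (naa.60050768018d8303c000000000000003)' }
    [pscustomobject]@{ Device = 'mpx.vmhba32:C0:T0:L0'; DisplayName = 'Local USB Direct-Access (mpx.vmhba32:C0:T0:L0)' }
)
$path_list = @(
    [pscustomobject]@{ Adapter = 'vmhba3'; Device = 'naa.60050768018d8303c000000000000003'; TargetIdentifier = 'fc.5005076801000002:5005076801100002'; RuntimeName = 'vmhba3:C0:T0:L11'; LUN = 11; State = 'active' }
    [pscustomobject]@{ Adapter = 'vmhba2'; Device = 'naa.60050768018d8303c000000000000003'; TargetIdentifier = 'fc.5005076801000001:5005076801200001'; RuntimeName = 'vmhba2:C0:T1:L11'; LUN = 11; State = 'active' }
    [pscustomobject]@{ Adapter = 'vmhba32'; Device = 'mpx.vmhba32:C0:T0:L0'; TargetIdentifier = 'mock-local-target'; RuntimeName = 'vmhba32:C0:T0:L0'; LUN = 0; State = 'active' }
)
$clusterName = 'Development'  # hypothetical stand-in for $cluster.Name

# Build a lookup of Fibre Channel device names once, keyed by device name
$fcDevices = @{}
foreach ($d in $device_list) {
    if ($d.DisplayName -match 'Fibre Channel') { $fcDevices[$d.Device] = $true }
}

# Single pass over the paths: keep only those whose device is in the lookup
$report = $path_list |
    Where-Object { $fcDevices.ContainsKey($_.Device) } |
    Select-Object @{N='Cluster';E={$clusterName}}, Adapter, Device, TargetIdentifier, RuntimeName, LUN, State

$report | Format-List
```

For a handful of LUNs the difference is negligible. The lookup only starts to matter on hosts with many devices and paths.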

Another example: the virtualisation team manager asked for a list of virtual disks (FC type) that are attached to the ESXi servers but not formatted as VMFS. Specifically, he expected the following:

- Cluster

- Device

- Device file system path

- Display Name

- Size

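A report like this makes it easy to identify which virtual disks are being wasted (bear in mind that a device without VMFS may still be in use, for example as an RDM). As before, save the output of the following to variables: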

- $esxcli.storage.core.device.list(): outputs the list of all storage devices attached to this ESXi server.

- $esxcli.storage.vmfs.extent.list(): outputs the list of devices (and partitions) backing VMFS volume extents on this ESXi server, i.e. the devices already formatted as VMFS.

Using the device list, run a where filter to:

- Make sure the device is not formatted as VMFS. I used -notmatch against the device names of all VMFS extents joined by |, which means "or" in a regular expression.

- Make sure the type is Fibre Channel.

Combining the above:

$device_list = $esxcli.storage.core.device.list()

$vmfs_list = $esxcli.storage.vmfs.extent.list()

$cluster = Get-Cluster -VMHost (Get-VMHost -Name $esxcli.system.hostname.get().FullyQualifiedDomainName)

$device_list | where {$_.Device -notmatch ([string]::Join("|", $vmfs_list.DeviceName)) -and $_.DisplayName -match "Fibre Channel" } | select @{N="Cluster";E={$cluster.Name}}, Device, DevfsPath, DisplayName, @{N="Size (GB)";E={$_.Size / 1024}}

Example Output:

Cluster : Development

Device : naa.60050768018d8303c000000000000006

DevfsPath : /vmfs/devices/disks/naa.60050768018d8303c000000000000006

DisplayName : IBM Fibre Channel Disk (naa.60050768018d8303c000000000000006)

Size (GB) : 128
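One thing to watch with the regular-expression filter is what happens when the joined pattern ends up empty, for example on a host that has no VMFS extents yet. An empty pattern matches every string, so -notmatch is false for every device and the report silently comes back empty. Note also that -match does substring regex matching rather than exact comparison. The minimal sketch below contrasts this with an exact set-membership test using -notin (PowerShell 3.0 or later). It uses hypothetical mock data, with Size assumed to be in MB, as the / 1024 conversion above implies.

```powershell
# Hypothetical mock data standing in for $esxcli.storage.core.device.list()
# (Size assumed to be in MB, matching the "/ 1024" conversion to GB above).
$device_list = @(
    [pscustomobject]@{ Device = 'naa.60050768018d8303c000000000000006'; DevfsPath = '/vmfs/devices/disks/naa.60050768018d8303c000000000000006'; DisplayName = 'IBM Fibre Channel Disk (naa.60050768018d8303c000000000000006)'; Size = 131072 }
)

# Mock: a host with no VMFS extents at all
$vmfs_list = @()

# Regex approach: joining an empty list gives the pattern "", which matches any string,
# so -notmatch is $false for every device and nothing is reported.
$pattern = [string]::Join('|', [string[]]@($vmfs_list | ForEach-Object { $_.DeviceName }))
$viaRegex = @($device_list | Where-Object { $_.Device -notmatch $pattern -and $_.DisplayName -match 'Fibre Channel' })

# Set-membership approach: exact comparison against the VMFS device names.
$vmfsDevices = @($vmfs_list | ForEach-Object { $_.DeviceName })
$viaNotIn = @($device_list |
    Where-Object { $_.Device -notin $vmfsDevices -and $_.DisplayName -match 'Fibre Channel' } |
    Select-Object Device, DevfsPath, DisplayName, @{N='Size (GB)';E={$_.Size / 1024}})

"Regex approach found $($viaRegex.Count) device(s); -notin approach found $($viaNotIn.Count)."
```

With at least one VMFS extent present, both filters should agree. The -notin form simply avoids the empty-pattern edge case and any accidental partial match.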

Network

In this Network section, I will give two examples:

- Firewall

- LACP

Let’s start with Firewall.

One of the VMware administrators deployed vRealize Log Insight. Before pointing the ESXi servers to Log Insight, he wanted to check which allowed IP addresses had previously been configured for syslog, so that he could remove them in advance. These had been configured to restrict access to the syslog server for security purposes.

This time, we use the $esxcli.network.firewall namespace. First, save the list of rulesets with their allowed IP addresses:

- $esxcli.network.firewall.ruleset.allowedip.list()

Then filter for the syslog ruleset only. Combining the above:

$esxi = $esxcli.system.hostname.get().FullyQualifiedDomainName

$ruleset_list = $esxcli.network.firewall.ruleset.allowedip.list()

$ruleset_list | where {$_.ruleset -eq "syslog"} | select @{N="ESXi";E={$esxi}}, Ruleset, AllowedIPAddresses

Example output:

ESXi : esx01.test.com

Ruleset : syslog

AllowedIPAddresses : {10.10.1.10}
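The filter above checks one host at a time. To review several hosts before repointing syslog, you can apply the same filter to results collected per host and list each old allowed IP on its own row. The minimal sketch below uses hypothetical mock data. The second host, the sshServer ruleset entry and $logInsightIP (the address of the new Log Insight node) are all made up for illustration.

```powershell
# Hypothetical mock data: allowed-IP rulesets per host, standing in for
# $esxcli.network.firewall.ruleset.allowedip.list() collected from each ESXi server.
$rulesetsByHost = [ordered]@{
    'esx01.test.com' = @(
        [pscustomobject]@{ Ruleset = 'syslog'; AllowedIPAddresses = @('10.10.1.10') }
        [pscustomobject]@{ Ruleset = 'sshServer'; AllowedIPAddresses = @('10.10.1.50') }
    )
    'esx02.test.com' = @(
        [pscustomobject]@{ Ruleset = 'syslog'; AllowedIPAddresses = @('10.10.1.10', '10.10.1.20') }
    )
}
$logInsightIP = '10.10.1.20'  # hypothetical address of the new Log Insight node

# For each host, list syslog allowed IPs that are not the new Log Insight node,
# i.e. the entries to review and remove before repointing syslog.
$stale = foreach ($esxi in $rulesetsByHost.Keys) {
    $rulesetsByHost[$esxi] |
        Where-Object { $_.Ruleset -eq 'syslog' } |
        ForEach-Object {
            foreach ($ip in $_.AllowedIPAddresses) {
                if ($ip -ne $logInsightIP) {
                    [pscustomobject]@{ ESXi = $esxi; Ruleset = $_.Ruleset; AllowedIP = $ip }
                }
            }
        }
}

$stale | Format-Table -AutoSize
```

In a real run, each host's list would come from its own Get-EsxCli object rather than from a hashtable literal.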

Another example: the network team wanted output from the ESXi servers to check the following:

- The LACPDU counters (transmitted/received) and whether there are any errors

- The LACP configuration, especially the LACP period (fast or slow)

I wrote an article about Advanced LACP Configuration using ESXCLI; I suggest reading it if you are not familiar with LACP configuration on ESXi.

As above, save the LACP statistics to a variable and select the following:

- Name of ESXi

- Name of dvSwitch

- NIC, e.g. vmnic0

- Receive errors

- Received LACPDUs

- Transmit errors

- Transmitted LACPDUs

And the script would be:

$esxi = $esxcli.system.hostname.get().FullyQualifiedDomainName

$lacp_stats = $esxcli.network.vswitch.dvs.vmware.lacp.stats.get()

$lacp_stats | select @{N="ESXi";E={$esxi}}, DVSwitch, NIC, RxErrors, RxLACPDUs, TxErrors, TxLACPDUs

Example Output:

ESXi : esx01.test.com

DVSwitch : dvSwitch_Test

NIC : vmnic1

RxErrors : 0

RxLACPDUs : 556096

TxErrors : 0

TxLACPDUs : 555296

ESXi : esx01.test.com

DVSwitch : dvSwitch_Test

NIC : vmnic0

RxErrors : 0

RxLACPDUs : 556096

TxErrors : 0

TxLACPDUs : 555296
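With many hosts and NICs, it helps to show only the rows that need attention. The minimal sketch below keeps only NICs that report LACPDU receive or transmit errors. The vmnic0/vmnic1 counters are taken from the example output above, while vmnic2 is a made-up row with errors. The counters are cast to [int64] in case they come back as strings.

```powershell
# Hypothetical mock data standing in for $esxcli.network.vswitch.dvs.vmware.lacp.stats.get();
# vmnic0/vmnic1 values come from the example output above, vmnic2 is made up.
$lacp_stats = @(
    [pscustomobject]@{ DVSwitch = 'dvSwitch_Test'; NIC = 'vmnic1'; RxErrors = 0; RxLACPDUs = 556096; TxErrors = 0; TxLACPDUs = 555296 }
    [pscustomobject]@{ DVSwitch = 'dvSwitch_Test'; NIC = 'vmnic0'; RxErrors = 0; RxLACPDUs = 556096; TxErrors = 0; TxLACPDUs = 555296 }
    [pscustomobject]@{ DVSwitch = 'dvSwitch_Test'; NIC = 'vmnic2'; RxErrors = 3; RxLACPDUs = 120; TxErrors = 0; TxLACPDUs = 118 }
)
$esxi = 'esx01.test.com'  # mock host name

# Keep only NICs reporting receive or transmit errors
$problems = @($lacp_stats |
    Where-Object { [int64]$_.RxErrors -gt 0 -or [int64]$_.TxErrors -gt 0 } |
    Select-Object @{N='ESXi';E={$esxi}}, DVSwitch, NIC, RxErrors, TxErrors)

$problems | Format-Table -AutoSize
```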

For the configuration report, you are probably most interested in the fast/slow LACP period, as mentioned above.

Similarly, save the LACP status output to a variable. Then, for each NIC in its NicList, select the following:

- Name of ESXi server

- Name of dvSwitch

- Status of LACP

- NIC, e.g. vmnic0

- Flag Description

- Flags

Combining above:

$esxi = $esxcli.system.hostname.get().FullyQualifiedDomainName

$information = $esxcli.network.vswitch.dvs.vmware.lacp.status.get()

$information | ForEach-Object { $lag = $_; $lag.NicList | Select @{N="ESXi";E={$esxi}}, @{N="dvSwitch";E={$lag.DVSwitch}}, @{N="LACP Status";E={$lag.Mode}}, Nic, @{N="Flag Description";E={$lag.Flags}}, @{N="Flags";E={$_.PartnerInformation.Flags}} }

Example Output:

ESXi : esx01.test.com

dvSwitch : dvSwitch_Test

LACP Status : Active

Nic : vmnic1

Flag Description : {S - Device is sending Slow LACPDUs, F - Device is sending fast LACPDUs, A - Device is in active mode, P - Device is in passive mode}

Flags : SA

ESXi : esx01.test.com

dvSwitch : dvSwitch_Test

LACP Status : Active

Nic : vmnic0

Flag Description : {S - Device is sending Slow LACPDUs, F - Device is sending fast LACPDUs, A - Device is in active mode, P - Device is in passive mode}

Flags : SA

With the report above, the network team can find out which ESXi servers are using the fast or slow LACP period and configure LACP accordingly (an LACP period mismatch is not good!).
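The Flags column is compact but easy to misread across many hosts. The minimal sketch below decodes it into explicit Period and Mode columns, using the legend printed in Flag Description (S, F, A, P). It then lists the rows that differ from an expected period. ConvertFrom-LacpFlags and $expectedPeriod are hypothetical names, and the esx02 row is made-up mock data.

```powershell
# Hypothetical helper: translate an LACP Flags string (e.g. "SA") into period and mode,
# using the legend from the Flag Description output: S = slow, F = fast, A = active, P = passive.
function ConvertFrom-LacpFlags {
    param([string]$Flags)
    $upper  = $Flags.ToUpperInvariant()
    $period = if ($upper.Contains('F')) { 'Fast' } elseif ($upper.Contains('S')) { 'Slow' } else { 'Unknown' }
    $mode   = if ($upper.Contains('A')) { 'Active' } elseif ($upper.Contains('P')) { 'Passive' } else { 'Unknown' }
    [pscustomobject]@{ Period = $period; Mode = $mode }
}

# Hypothetical mock rows shaped like the report above (the esx02 row is made up)
$rows = @(
    [pscustomobject]@{ ESXi = 'esx01.test.com'; dvSwitch = 'dvSwitch_Test'; Nic = 'vmnic1'; Flags = 'SA' }
    [pscustomobject]@{ ESXi = 'esx01.test.com'; dvSwitch = 'dvSwitch_Test'; Nic = 'vmnic0'; Flags = 'SA' }
    [pscustomobject]@{ ESXi = 'esx02.test.com'; dvSwitch = 'dvSwitch_Test'; Nic = 'vmnic0'; Flags = 'FA' }
)
$expectedPeriod = 'Slow'  # hypothetical: the period agreed with the network team

# Decode each row, then keep the rows whose period differs from the expected one
$summary = $rows | ForEach-Object {
    $decoded = ConvertFrom-LacpFlags -Flags $_.Flags
    [pscustomobject]@{ ESXi = $_.ESXi; dvSwitch = $_.dvSwitch; Nic = $_.Nic; Period = $decoded.Period; Mode = $decoded.Mode }
}
$mismatch = @($summary | Where-Object { $_.Period -ne $expectedPeriod })

$summary | Format-Table -AutoSize
```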

Wrap-Up

This blog post discussed how to use ESXCLI commands to generate advanced reports. I didn’t go through the properties in depth, as I covered them in Part 2, and you can explore them on your own at your own pace.

I hope it was easy enough to follow and understand. In the next part of the series, I will discuss how to use PLINK to generate a combined report covering ESXi and non-ESXi systems.

You are always welcome to leave a reply with any questions or requests for clarification.